Extract Tables from Pdf using Python

Guide to get tables from PDFs using Python

Oct 24, 2023 | Read time 9 minutes

📗 Table of contents

Introduction

Have you ever had the problem of trying to extract table data from PDFs with Python.

Initially this sounds like a pretty standard job when working on any data analysis project, but ends up being a pain in the backside!!

I found that there could be many issues that can come up when we go down this path:

- Finding libraries that could extract the contents and figuring out the tables. Not all libraries are equal, some perform better than others (Tabula, PdfPlumber, etc).

- Manage installations and packages. This can be a pain when you are using different versions, managing dependencies, etc. Additionally some packages comes with their own bugs and have not been actively maintained!

- Making sure the code is optimized. More often when dealing with large PDF files, you will end up with CPU and memory management.

My solution was to create Pdfdodo to help with this. PdfDodo comes with a generous free tier so would be suitable for small projects!

In this post I will go over how we do this with Python and calling the PdfDodo API. There are also OSS Python alternatives you can also take (PdfPlumber and Tabula).

Writing your own PDF table detection algorithm

Now if you decide to go down the path of creating our own algorithm, we would need to consider:

- Normalize our PDFs - making sure all pages are rotated to upright orientation.

- Finding out separator lines and grids - this is crucial in defining the structure of a table

- Determining table areas in the document - detecting and identifying these table areas is necessary to extract the relevant data.

- Determining the row and column structure that needs to be defined for our table. This involves identifying the number of rows and columns in the table.

- Setting the header rows of the table - recognizing and defining these header rows will improve our table extraction.

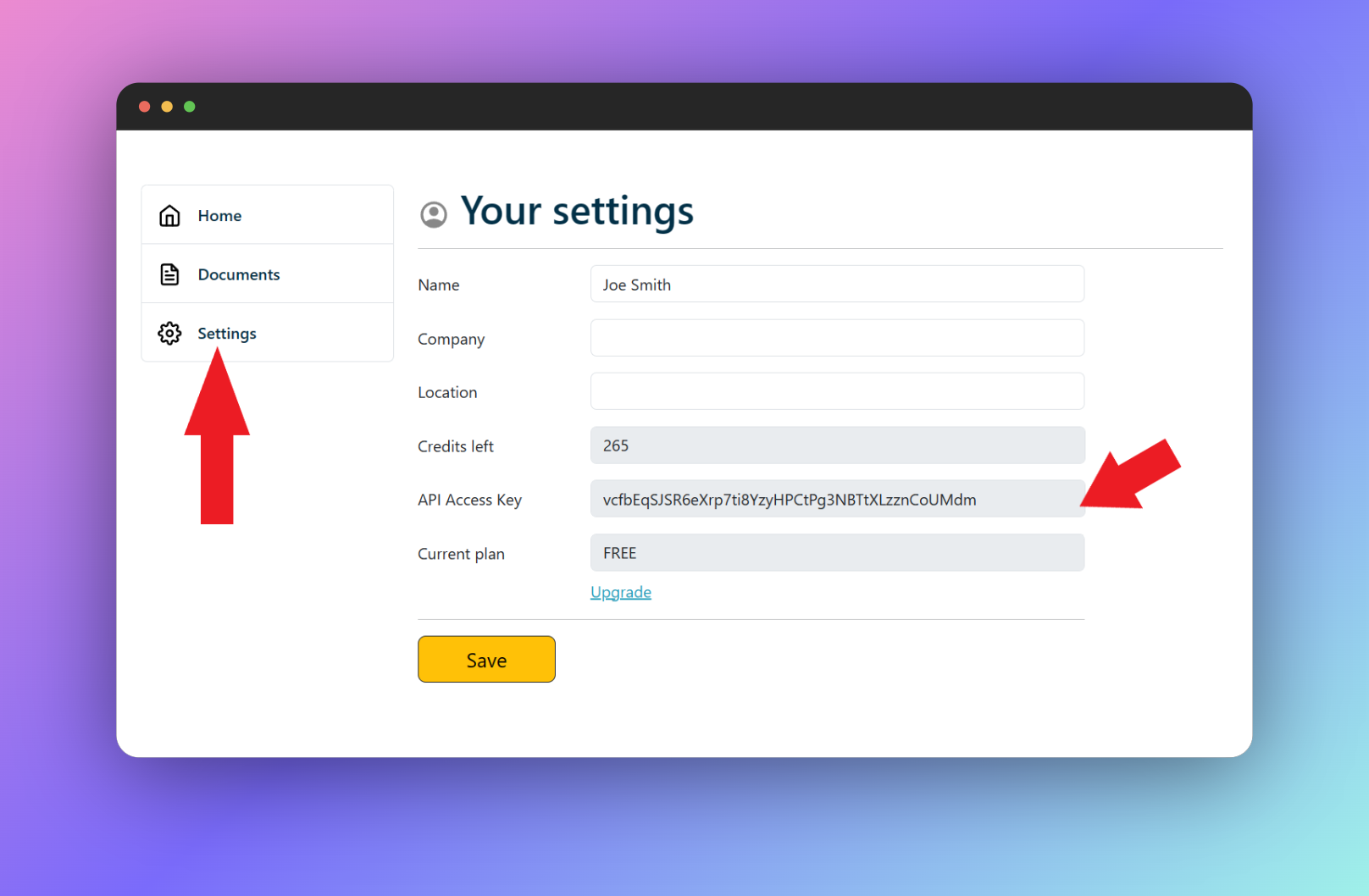

Step 1: Get Pdfdodo API Key

PdfDodo uses a API keys to allow you to access the API. You can get your API key by going to the “Settings” section.

After retrieving your API key, you can pass the token to the API in the HTTP Authorization Header using X-API-KEY.

X-API-KEY: <apikey>Step 2: Upload a PDF file

The next step after we have successfully grabbed our API key, we can upload our document and then call the analyze endpoint to get the data.

Make sure to add X-API-KEY in the Authorization Header.

To upload our PDF file, we can do this in Python as follows.

pip install requestsAfter we have installed the requests library, use the following code to call the Upload file endpoint.

import requests

url = "https://app.pdfdodo.com/api/uploadfile"

headers = {

"Content-Type": "multipart/form-data",

"x-api-key": "YOUR_API_KEY"

}

files = {

'file': ('file.pdf', open('/path/to/your/file.pdf', 'rb'))

}

response = requests.post(url, headers=headers, files=files)The response will be a JSON result. For example:

{

"documentId": "123e4567-e89b-12d3-a456-426614174000",

"documentName": "your-test-file.pdf"

}Keep note of the documentId.

Step 3: Call the analyze endpoint

The last step is to do a GET request to the analyze endpoint, /api/analyze, to get the document data.

For example, lets say our document id is 123e4567-e89b-12d3-a456-426614174000, we can do the following Python code to analyze and get the document data:

import requests

# API endpoint

url = "https://app.pdfdodo.com/api/analyze?documentid=123e4567-e89b-12d3-a456-426614174000"

# Headers

headers = {

"Content-Type": "application/json",

"x-api-key": "YOUR_API_KEY_HERE"

}

# Making the GET request

response = requests.get(url, headers=headers)

# Printing the response

print(response.text)This will give you a JSON response of the data in your PDF:

{

"tables": [

{

"title": "The title of your table",

"tableId": "a0f229f6-ade7-4637-bbff-e31fbbd64ec5",

"pageNumber": 1,

"rows": [

[

"0",

"Money In ",

"$1,880.00 "

],

[

"1",

"Money Out ",

"$150.25 "

],

[

"2",

"You Received ",

"$1,729.75 "

]

],

"left": 0.6953286,

"top": 0.2655254,

"width": 0.2580709,

"height": 0.11655326,

"mergedTables": []

}

]

}Alternative 1: Use Tabula library

Now if you want to use a OSS library, Tabula would have to one of the biggest (and probably older) table extract libraries.

It was written in Java so to get it working in Python, we have use the ported version: tabula-py.

tabula-py is a simple Python wrapper of tabula-java, which can read table of PDF. You can read tables from PDF and convert them into pandas’ DataFrame. tabula-py also converts a PDF file into CSV/TSV/JSON file.`

To get started, we need to install the Python tabula-py library with the following command:

pip install tabula-pyNow we also need to make sure that Java is installed. Additionally check that you can use java command on your terminal (eg setting the PATH).

import tabula

# Read pdf into list of DataFrame

dfs = tabula.read_pdf("test.pdf", pages='all')

# Read remote pdf into list of DataFrame

dfs2 = tabula.read_pdf("<url to pdf>")

# convert PDF into CSV file

tabula.convert_into("test.pdf", "output.csv", output_format="csv", pages='all')

# convert all PDFs in a directory

tabula.convert_into_by_batch("input_directory", output_format='csv', pages='all')Results

I found the results of Tabula to be ok.

Some issues I found:

- For PDFs featuring tables with vertical headers, Tabula often misses some header content.

- In cases where PDF tables have indistinct lines, Tabula tends to misalign data points, shifting them leftward.

- When a column spans across multiple cells, Tabula may realign certain top-right headers to the left.

- For unconventional tables, such as form-like structures, Tabula might not provide any output.

- If the table is oriented counter-clockwise, the output from Tabula is not reliable.

- With tables where rows stretch over multiple cells, Tabula might shift some data points leftward.

- For PDFs containing two tables on one page, Tabula’s output can be inconsistent.

Alternative 2: Using PdfPlumber library

PdfPlumber is another library that I was playing around with. We can get it from here:

https://pypi.org/project/pdfplumber/

To get started, install with pip

pip install pdfplumberWe then can use the following code:

import pdfplumber

# Path to your PDF

pdf_path = "/path/to/your/pdf.pdf"

# Open the PDF

with pdfplumber.open(pdf_path) as pdf:

# Loop through all the pages in the PDF

for page in pdf.pages:

# Extract tables from the current page

tables = page.extract_tables()

# Loop through all tables

for table in tables:

# `table` is a list of lists, where each

# inner list represents a row in the table.

for row in table:

print(row)

print("---- End of Table ----")Results and notes

- Pdfplumber internally uses pdf2image, which requires poppler-utils. Ensure you have it installed in your system.

I found PdfPlumber does not do a great job in the following scenarios:

- The table contains vertical text, leading to messed up header text.

- The table is rotated, resulting in unusable output.

- Two similar tables are present on one page, causing unusable output.

- Tables have two or more columns with no values.

Final thoughts

Extracting data out of PDFs, using Python, can be a pain in the backside. We can use already existing libraries like Tabula or PdfPlumber.

We can use the PdfDodo API to extract data out of PDF files and into CSV or JSON format with Python and the requests library.

If you end up going down the path of Open Source libraries, you will have full control of the code.

These OSS libraries are great and have been around for a while. However, when you go down this path you may be faced with problems like:

- making sure using the right versions and dependencies - eg the right Python version, Java runtime, etc

- checking that the code is optimized for CPU and memory usage. This can happen with big PDF files.

- dealing or working around existing bugs.

Additionally the limitation of these libraries are that they are optimized for text based PDFs and not scanned ones. In that case, you will also need to introduce a OCR library!

👋 About the Author

G'day! I am Ken Ito a software engineer based in Australia. I have worked as a software engineer for more than 10 years ranging from roles such as tech lead, data analyst to individual contributor. I have experience in creating code (python, javascript) to extract data from PDFs for ETL and financial statement analysis for accounting purposes.

During those years, I have worked for Government departments, companies like NTT (Nippon Telegraph and Telephone), Bupa and not-for-profit organizations.

3 Ways to Convert Pdf to Excel Without Losing Formatting

Sep 21, 2023Step by Step to convert your scanned PDFs to Excel without losing formatting.

Extract Capital One Credit Statement as Excel

Oct 12, 2023Tips to export your Capital One Credit Statement as Excel

Top 3 ways to Download Monzo Bank Statement as CSV

Oct 12, 2023Reviews of the top 3 ways to Download Monzo Bank Statement as CSV